As brands and developers increasingly use OpenAI’s powerful resources in their operations, a common challenge they face is accurately estimating their ChatGPT API cost. The API doesn’t follow a one-size-fits-all pricing strategy. Instead, ChatGPT API costs fluctuate based on factors like token usage, request frequency, and the models you call.

Automated ChatGPT API price calculators exist for getting quick estimates, but they often lack the granularity needed for precise budgeting. They operate on average values and standard use cases, potentially overlooking the unique requirements of individual businesses. For instance, a company using ChatGPT for content creation will likely have different token consumption patterns than a business using it for tech support chatbots. 👨‍🚀👨‍🚀

Because of all those complexities, we’ve taken it upon ourselves to demystify the ChatGPT API cost structure and show how it maps to your specific technical requirements. ⚙️


ChatGPT API pricing structure

The ChatGPT API serves as a bridge to OpenAI’s most advanced language models, GPT-4 and GPT-3.5 Turbo.

Both models were trained on vast amounts of data to interpret and produce text with human-like patterns. By integrating either of them into your application, you get an intelligent text generator that responds contextually to user inputs.

Every text string, whether a question or an answer, gets split into units called tokens. In English, a token can be as short as a single character or as long as a word; on average, one token is about four characters, or roughly three-quarters of a word. The number of input tokens you send and output tokens you receive, each billed at its own rate, is what ultimately determines your ChatGPT API cost, and it also influences response time.

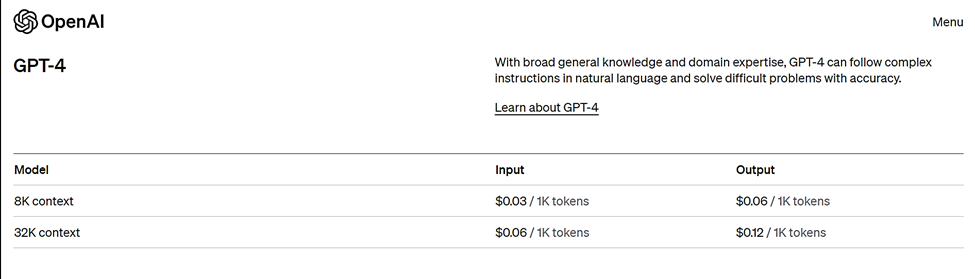

The GPT-4 API itself comes in two context limits: 8K and 32K. The 8K version can handle roughly 8,000 tokens, while the 32K version supports about 32,000 tokens of combined input and output.

The 8K model supports in-depth conversations and detailed content drafts. And for that, you’ll pay $0.03 for every 1,000 input tokens and $0.06 per 1,000 output tokens.

The 32K version offers a much larger context window, which bumps the ChatGPT API cost up to $0.06 per 1K input tokens and $0.12 per 1K output tokens.

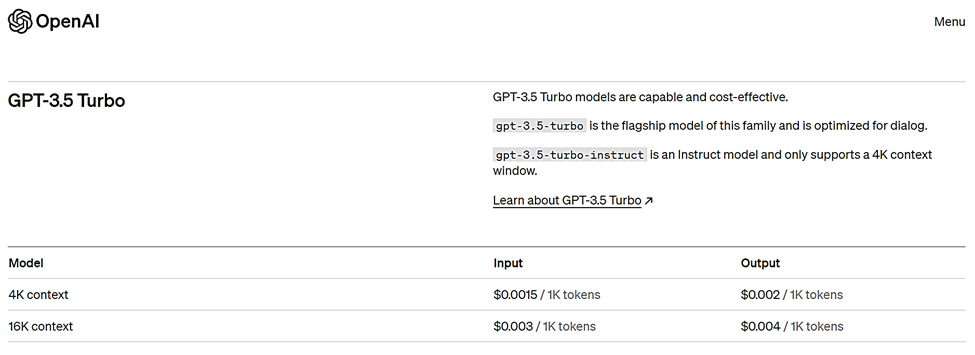

GPT-3.5 Turbo API, on the other hand, boasts 4K and 16K variants.

The 4K variant (supporting about 4,000 input and output tokens combined) works for basic dialog applications; OpenAI charges you $0.0015 per 1,000 input tokens and $0.002 per 1,000 output tokens.

For extended dialogues and instruction-based replies, you could go with the 16K context model for $0.003 per 1,000 input tokens and $0.004 for the same volume of output tokens.
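To compare the variants side by side, the rates above can go into a small lookup table. This is a minimal Python sketch: the dictionary keys are our own labels (not official model IDs), and the prices are the ones quoted in this article, so check OpenAI’s pricing page before budgeting with them.

```python
# Per-1,000-token prices in USD, as quoted in this article.
# The keys are our own labels, not official OpenAI model IDs.
PRICES_PER_1K = {
    "gpt-4-8k": {"input": 0.03, "output": 0.06},
    "gpt-4-32k": {"input": 0.06, "output": 0.12},
    "gpt-3.5-turbo-4k": {"input": 0.0015, "output": 0.002},
    "gpt-3.5-turbo-16k": {"input": 0.003, "output": 0.004},
}


def request_cost(model: str, input_tokens: int, output_tokens: int) -> float:
    """USD cost of one request; input and output tokens are billed at separate rates."""
    rates = PRICES_PER_1K[model]
    return (input_tokens / 1000) * rates["input"] + (output_tokens / 1000) * rates["output"]


if __name__ == "__main__":
    # Price the same request (1,500 tokens in, 500 tokens out) on every variant.
    for name in PRICES_PER_1K:
        print(f"{name:>18}: ${request_cost(name, 1500, 500):.5f}")
```

Because output tokens cost twice as much as input tokens on every variant listed here, capping response length usually saves more than trimming prompts by the same number of tokens.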

The advantage of this token-based pricing structure is that it lets users adjust their API usage to their operational demands. Whether you’re a budding startup or a large-scale enterprise, you only pay for what you use.

You might, however, find it difficult to estimate token usage at first, especially if you use the ChatGPT API in dynamic applications with diverse user inputs.

Once you learn the ropes, though, you’ll be able to define your average token usage with precision. Budgeting becomes simpler, and you get to adjust ChatGPT API cost based on the projected interactions.

Common ChatGPT API integration use cases and their costs

Below, we go through common use cases of the ChatGPT API integration and what you might expect to spend with each.

Content generation 💡

The ChatGPT API, with its automated language models, continues to redefine the entire content creation pipeline.

As a blogger, for instance, you could set up prompts that guide the model to produce posts on various topics while maintaining a consistent structure and tone. A standard 800-word article would take up about 1,070 output tokens, bringing the output cost to approximately $0.064 on the GPT-4 8K model, plus a little more for the input tokens in your prompt.

Social media content, on the other hand, is typically shorter but requires more creativity and context awareness. Your AI content writer integration should be focused on generating short, engaging snippets that resonate with the target audience.

The output can be a 280-character tweet or a Facebook post, both of which the GPT-3.5 Turbo 4K model can produce. A 280-character post comes to about 70 output tokens, which works out to roughly $0.00014 per post.

Another area where the GPT-3.5 Turbo 4K model could work is writing product descriptions for ecommerce platforms. A single product page may need 100 words, or roughly 130 tokens, meaning each description costs about $0.0003 in output tokens before you count the prompt.
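The sketch below estimates the output cost of each content type using OpenAI’s rule of thumb for English text (about 4 characters, or about 0.75 words, per token). It’s an approximation only: real token counts depend on the exact wording, and the prompt’s input tokens are billed on top.

```python
# OpenAI's rule of thumb for English: 1 token ~ 4 characters ~ 0.75 words.
CHARS_PER_TOKEN = 4
WORDS_PER_TOKEN = 0.75


def tokens_from_words(words: int) -> float:
    return words / WORDS_PER_TOKEN


def tokens_from_chars(chars: int) -> float:
    return chars / CHARS_PER_TOKEN


def output_cost(tokens: float, price_per_1k: float) -> float:
    """Cost of generated (output) tokens only; the prompt is billed separately."""
    return tokens / 1000 * price_per_1k


# Blog article: 800 words on GPT-4 8K ($0.06 per 1K output tokens).
article = output_cost(tokens_from_words(800), 0.06)
# Social post: 280 characters on GPT-3.5 Turbo 4K ($0.002 per 1K output tokens).
post = output_cost(tokens_from_chars(280), 0.002)
# Product description: 100 words on GPT-3.5 Turbo 4K.
description = output_cost(tokens_from_words(100), 0.002)

print(f"article ~${article:.4f}, post ~${post:.5f}, description ~${description:.5f}")
```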

Powering web chatbots 👾

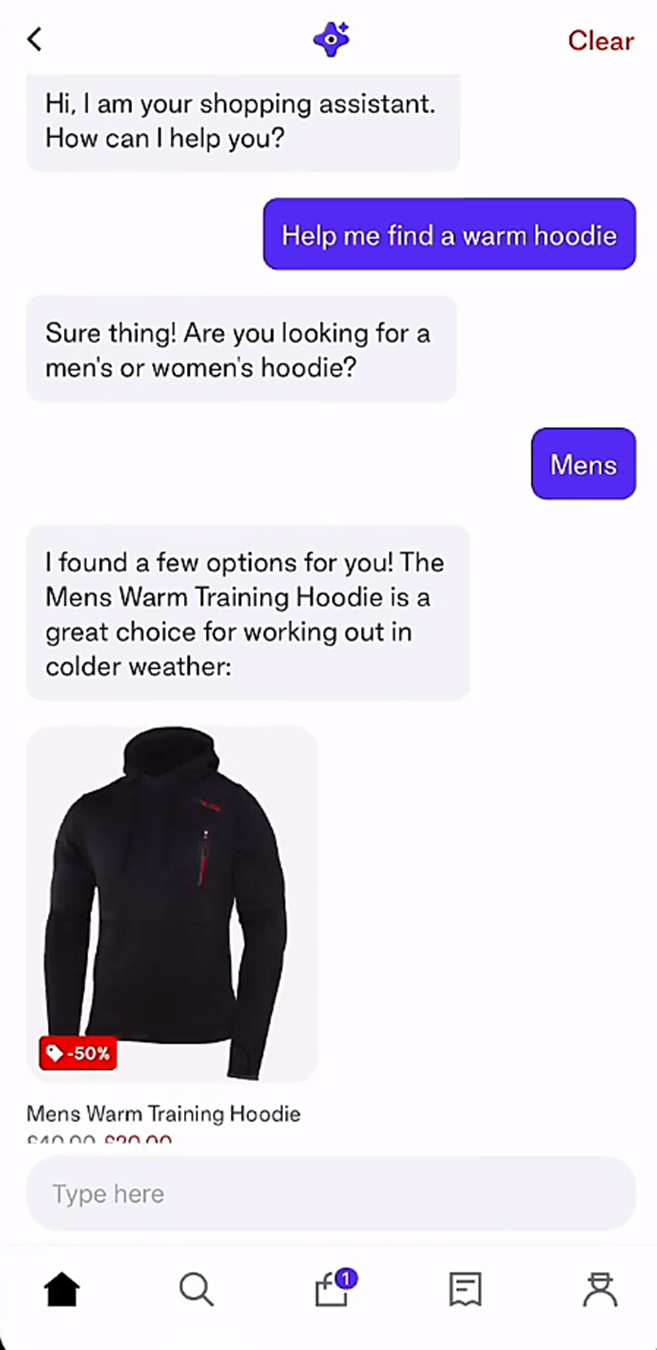

To integrate ChatGPT into a chatbot platform, developers set up API calls between their application and OpenAI’s servers. User messages are relayed in real time from your chatbot to the API, which then returns contextually relevant responses.
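Here’s a minimal sketch of that relay using only Python’s standard library. It targets OpenAI’s Chat Completions endpoint with the standard `model`, `messages`, and `max_tokens` fields; the helper names and the default model are our own choices, and you’d supply your own API key. The key cost detail is that the API is stateless: every call resends the earlier conversation, so those turns are billed as input tokens again.

```python
import json
import urllib.request

API_URL = "https://api.openai.com/v1/chat/completions"


def build_payload(history: list[dict], user_message: str,
                  model: str = "gpt-3.5-turbo", max_tokens: int = 200) -> dict:
    """Build a Chat Completions request body.

    `history` holds the system prompt and earlier turns. All of it is sent
    again on every call, so longer sessions mean more input tokens.
    `max_tokens` caps how many output tokens the reply can use.
    """
    messages = history + [{"role": "user", "content": user_message}]
    return {"model": model, "messages": messages, "max_tokens": max_tokens}


def send(payload: dict, api_key: str) -> dict:
    """POST the payload to OpenAI and return the parsed JSON response."""
    request = urllib.request.Request(
        API_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json",
                 "Authorization": f"Bearer {api_key}"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=30) as response:
        return json.load(response)
```

The response’s `choices[0]["message"]["content"]` holds the reply, and its `usage` object reports `prompt_tokens` and `completion_tokens`, which are worth logging for the cost tracking discussed later.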

For ecommerce platforms, such AI chatbots can assist site visitors by recommending products, detailing product features, or processing returns.

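Assume an average of five exchanges per web visitor, with each user message and each reply about 20 tokens long. A single session would then consume about 100 input tokens and 100 output tokens on GPT-4 8K. So, for a business with 1,000 sessions per day, usage could stretch to 100,000 input and 100,000 output tokens. That amounts to a daily ChatGPT API cost of $9: $3 for input and $6 for output. In practice, input usage runs higher, because each API call resends the earlier conversation as context.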

Businesses can also use AI chatbots to collect feedback from customers. To minimize costs, though, you could set up the chatbot to manage the initial interactions independently and only consult ChatGPT for more complex, open-ended queries.
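A minimal sketch of that hybrid setup, assuming a feedback flow that opens with a 1-to-5 star rating. Ratings get hypothetical scripted replies at no API cost, and only free-text comments go to `ask_model`, a stand-in for whatever function actually calls the API.

```python
from typing import Callable

# Hypothetical scripted replies for the predictable first step of the flow.
SCRIPTED = {
    "1": "Sorry to hear that. What went wrong with your order?",
    "2": "Sorry to hear that. What went wrong with your order?",
    "3": "Thanks! What could we have done better?",
    "4": "Great, thank you! Anything we could improve?",
    "5": "Wonderful, thank you for the feedback!",
}


def handle(message: str, ask_model: Callable[[str], str]) -> tuple[str, bool]:
    """Return (reply, used_api).

    Star ratings are answered locally; anything open-ended is forwarded to the model.
    """
    key = message.strip()
    if key in SCRIPTED:
        return SCRIPTED[key], False
    return ask_model(message), True
```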

If each user provides five sentences (15 tokens each), a single piece of feedback would be 75 tokens long. At 200 submissions per day, that’s about 15,000 input tokens, and replies of similar length add another 15,000 output tokens. With the GPT-3.5 Turbo 4K model, your daily ChatGPT API cost would be about $0.0225 for input and $0.03 for output, or roughly five cents in total.
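Both chatbot estimates follow the same formula: units per day × tokens per unit × price per token, computed separately for input and output. A short sketch, assuming (as the figures above do) that replies are about as long as the messages they answer:

```python
def daily_cost(units_per_day: int, in_tokens: int, out_tokens: int,
               in_price_1k: float, out_price_1k: float) -> tuple[float, float]:
    """Return (input_cost, output_cost) in USD per day."""
    input_cost = units_per_day * in_tokens / 1000 * in_price_1k
    output_cost = units_per_day * out_tokens / 1000 * out_price_1k
    return input_cost, output_cost


# Shopping assistant: 1,000 sessions/day, ~100 tokens in and ~100 out, GPT-4 8K.
shop_in, shop_out = daily_cost(1000, 100, 100, 0.03, 0.06)
# Feedback bot: 200 submissions/day, ~75 tokens in and ~75 out, GPT-3.5 Turbo 4K.
fb_in, fb_out = daily_cost(200, 75, 75, 0.0015, 0.002)

print(f"shopping assistant: ${shop_in:.2f} input + ${shop_out:.2f} output per day")
print(f"feedback bot: ${fb_in:.4f} input + ${fb_out:.4f} output per day")
```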

Customer support automation 🦾

OpenAI’s ChatGPT has become popular in online customer service due to its ability to handle a large volume of inquiries simultaneously. By channeling requests through its API, businesses can have a support system that answers routine questions and engages in deeper conversations.

For instance, businesses can automate email responses to common queries. To achieve this, they’d supply ChatGPT with examples of past customer interactions, covering both the queries and the support agents’ responses, either inside the prompt or, on models that support it, through fine-tuning (fine-tuned models are billed at their own rates). The model could then recognize recurring issues and generate appropriate email responses.

If each email involves about 200 input tokens and a reply of similar length, and ChatGPT processes 500 such emails daily, your company’s total volume would be 100,000 input and 100,000 output tokens. On the GPT-4 8K model, that amounts to approximately $9 per day ($3 for input and $6 for output).

The ChatGPT API also lets you add a voice assistant to your company’s phone system. Because the chat models work with text, you’d pair them with a speech-to-text service (such as OpenAI’s Whisper API) to transcribe callers’ queries and a text-to-speech engine to read the model’s responses back. These speech services are billed separately from ChatGPT tokens.

Assuming each call uses about 300 input tokens and 300 output tokens, 300 phone exchanges per day add up to 90,000 tokens in each direction. On GPT-4 8K, the resulting daily ChatGPT API cost would be approximately $8.10, excluding the speech services.
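To see what both support channels add up to over a month, here’s a small sketch at the GPT-4 8K rates. It assumes, as above, that each email or call has about as many output tokens as input tokens, and it leaves out speech-to-text and text-to-speech charges.

```python
# GPT-4 8K rates quoted earlier, in USD per 1,000 tokens.
IN_1K, OUT_1K = 0.03, 0.06


def gpt4_8k_daily(items_per_day: int, tokens_each_way: int) -> float:
    """Daily cost when each item sends ~tokens_each_way input tokens and gets a reply of similar length."""
    tokens = items_per_day * tokens_each_way
    return tokens / 1000 * IN_1K + tokens / 1000 * OUT_1K


email = gpt4_8k_daily(500, 200)   # 100,000 tokens in each direction
calls = gpt4_8k_daily(300, 300)   # 90,000 tokens in each direction

print(f"email ${email:.2f}/day, calls ${calls:.2f}/day, "
      f"~${(email + calls) * 30:,.0f} per 30-day month")
```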

Additional costs you might incur from ChatGPT API

To maximize the value of your AI integration, you should try to understand even the secondary ChatGPT API costs that come with it. You incur these expenses indirectly through the resources supporting your ChatGPT operations.

The main ones include:

1. Infrastructure 🚧

While the API itself is hosted on OpenAI’s servers, your application might require additional resources to handle the increased load, especially when user interactions surge. This could mean investing in more robust servers or scaling up cloud services.

For instance, if you’re using AWS (Amazon Web Services) or Google Cloud, you’d need to factor in these costs: compute instances, storage, and potential load balancers.

2. Data transfer 🔁

Data transfer, especially in cloud environments, isn’t always free. When your application sends a request to the ChatGPT API, data egress (outgoing traffic) occurs. The API’s response then flows back into your system as ingress (incoming traffic).

Whereas ingress is often free, egress can become costly in large volumes. AWS, for example, charges for data leaving its servers. If your application makes 10,000 API calls daily, each transferring 50 KB of data, you’re looking at 500 MB of egress per day, or about 15 GB per month.
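A rough egress estimator, as a sketch. The per-GB rate and any free allowance vary by provider and region, so the $0.09/GB used below is a placeholder, not a quoted AWS price.

```python
def daily_egress_gb(calls_per_day: int, kb_per_call: float) -> float:
    """Outbound data per day in GB, using decimal units (1 GB = 1,000,000 KB)."""
    return calls_per_day * kb_per_call / 1_000_000


def monthly_egress_cost(calls_per_day: int, kb_per_call: float, price_per_gb: float,
                        free_gb: float = 0.0, days: int = 30) -> float:
    """Billable egress for a month: total GB minus any free allowance, times the rate."""
    total_gb = daily_egress_gb(calls_per_day, kb_per_call) * days
    return max(total_gb - free_gb, 0.0) * price_per_gb


# Placeholder rate of 0.09 USD/GB; look up the actual rate for your provider and region.
print(f"{daily_egress_gb(10_000, 50):.2f} GB/day, "
      f"~${monthly_egress_cost(10_000, 50, 0.09):.2f}/month")
```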

3. Security and compliance 🔒

Any sensitive data processed through your system should be encrypted both in transit and at rest. That typically means protocols like TLS 1.3 for data in transit and services like AWS KMS for data at rest.
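For the in-transit half, a Python client can refuse anything older than TLS 1.3 using the standard library’s `ssl` module. This is a minimal sketch of the client side only; encryption at rest with a service like AWS KMS is outside its scope.

```python
import ssl


def tls13_client_context() -> ssl.SSLContext:
    """Client TLS context that rejects protocol versions older than TLS 1.3.

    create_default_context() already enables certificate and hostname verification.
    """
    context = ssl.create_default_context()
    context.minimum_version = ssl.TLSVersion.TLSv1_3
    return context
```

The returned context can be passed to `urllib.request.urlopen(..., context=context)` for outbound API calls.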

Additionally, businesses in sectors like healthcare or finance must confirm that their ChatGPT API integrations comply with industry regulations. You might need to set up specialized data protection measures and conduct regular compliance audits.

Top tips for optimizing ChatGPT API costs

As businesses scale and user interactions grow, even minor inefficiencies in your API architecture can lead to significant cost increases. Thankfully, you have several strategic ways to optimize your ChatGPT API expenditure without compromising its efficacy:

1. Cache responses 💾

One effective way to reduce ChatGPT API costs is by caching frequent responses. If users often ask similar questions, there’s no need to query the API repeatedly. You should, instead, implement caching mechanisms like Redis or Memcached. They’ll store common responses and serve them directly to users, thus reducing the number of API calls and speeding up response times.

Redis stores data as key-value pairs, which makes retrieval fast. If you hash each user query, the hash can serve as the key: when an incoming query produces a hash that’s already stored, you return the cached response instead of calling the API.
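Here’s a minimal sketch of that hashed-key lookup. To keep it runnable without a server, a plain dictionary stands in for Redis; with the redis-py client you’d swap the dictionary reads and writes for `r.get(key)` and `r.set(key, answer, ex=ttl_seconds)`. The `ask_model` argument is a hypothetical stand-in for your actual API call.

```python
import hashlib
from typing import Callable

# In-memory stand-in for Redis or Memcached so this sketch runs without a server.
_cache: dict[str, str] = {}


def cache_key(query: str, model: str) -> str:
    """Normalize case and whitespace, then hash; the model name is part of the key."""
    normalized = " ".join(query.lower().split())
    digest = hashlib.sha256(f"{model}:{normalized}".encode("utf-8")).hexdigest()
    return f"chatgpt:{digest}"


def cached_answer(query: str, model: str, ask_model: Callable[[str], str]) -> str:
    """Serve a stored answer when the hashed query is known; otherwise call the API once."""
    key = cache_key(query, model)
    if key in _cache:
        return _cache[key]      # hit: no API call, no tokens billed
    answer = ask_model(query)   # miss: one paid API call
    _cache[key] = answer
    return answer
```

Exact-match hashing only catches repeats after normalization; a differently worded version of the same question still misses the cache.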

2. Trim text inputs ✂️

Every token contributes to your ChatGPT API cost. That is reason enough to keep each request as short as possible.

You could, for instance, set the system to pre-process user inputs and:

- Remove redundant spaces or characters.

- Use abbreviations where the context allows.

- Strip out non-essential parts from queries.

Case in point: if a user asks, “Can you tell me about the return policy?”, pre-processing can trim it to “Return policy?”, which reduces token usage.
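The pre-processing rules above could look something like this. The filler patterns are hypothetical examples, not a recommended list. Overly aggressive trimming can change a query’s meaning, so it’s worth checking both the token savings and the answer quality on real traffic.

```python
import re

# Hypothetical filler patterns; tune them against your own traffic.
FILLER_PATTERNS = [
    r"^(hi|hello|hey)\b[,!.]*\s*",                                        # leading greeting
    r"\b(can|could|would) you (please )?(tell me|explain|let me know)( about)?\s*",
    r"\bplease\b,?\s*",
    r"^(the|a|an)\s+",                                                    # leading article
]


def trim_query(text: str) -> str:
    """Strip greetings and filler so fewer tokens reach the API."""
    text = " ".join(text.split())  # collapse redundant whitespace
    for pattern in FILLER_PATTERNS:
        text = re.sub(pattern, "", text, flags=re.IGNORECASE)
    text = " ".join(text.split()).strip(" ,")
    return text[:1].upper() + text[1:]
```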

3. Capitalize on OpenAI’s Tiktoken 🅱

OpenAI has built a Python library called Tiktoken that counts the tokens in a text string without making an API call. You can integrate it into your application’s backend to gauge token usage before each request.

This helps you decide whether to proceed with an API call or handle the query differently. Plus, you get to enforce and track per-request token limits.
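A sketch of a pre-flight token check. It uses Tiktoken’s `encoding_for_model` and `encode` when the library is installed, and falls back to the four-characters-per-token rule of thumb otherwise (for example, when Tiktoken can’t download its encoding files on first use). The `within_budget` helper and its limit are hypothetical. Chat requests also add a few tokens of per-message formatting, so a raw string count is close but not exact.

```python
import math

try:
    import tiktoken
except ImportError:  # library not installed: use the rough estimate below
    tiktoken = None


def count_tokens(text: str, model: str = "gpt-3.5-turbo") -> int:
    """Exact count via Tiktoken when available, else a ~4-characters-per-token estimate."""
    if tiktoken is not None:
        try:
            try:
                encoding = tiktoken.encoding_for_model(model)
            except KeyError:  # unrecognized model name: use the GPT-3.5/GPT-4 encoding
                encoding = tiktoken.get_encoding("cl100k_base")
            return len(encoding.encode(text))
        except Exception:  # e.g. encoding files could not be downloaded
            pass
    return math.ceil(len(text) / 4)


def within_budget(text: str, max_input_tokens: int, model: str = "gpt-3.5-turbo") -> bool:
    """Decide before calling the API whether the prompt fits a per-request token limit."""
    return count_tokens(text, model) <= max_input_tokens
```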

4. Track and set alerts 🚨

Regulating API usage becomes especially easy when the decisions are data-driven. You can use platforms like Grafana or AWS CloudWatch to gain real-time insights into your API call frequencies and usage patterns.

If you publish token usage or estimated spend to them as a metric, they can even alert you when your ChatGPT API cost approaches your budgeted limit.
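Before wiring up a dashboard, you can keep a running spend total in the application itself. The tracker below is a hypothetical in-app sketch that fires a callback once at each budget threshold. In a CloudWatch setup you might instead publish the running total with boto3’s `put_metric_data` and let a CloudWatch alarm handle the alerting.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class SpendTracker:
    """Accumulates estimated spend and fires an alert once per threshold crossed."""
    monthly_budget: float
    thresholds: tuple[float, ...] = (0.5, 0.8, 1.0)   # fractions of the budget
    on_alert: Callable[[str], None] = print
    spent: float = 0.0
    _fired: set = field(default_factory=set)

    def record(self, input_tokens: int, output_tokens: int,
               in_price_1k: float, out_price_1k: float) -> float:
        """Add one request's cost (from the API's reported usage) and check thresholds."""
        self.spent += input_tokens / 1000 * in_price_1k + output_tokens / 1000 * out_price_1k
        for fraction in self.thresholds:
            if self.spent >= fraction * self.monthly_budget and fraction not in self._fired:
                self._fired.add(fraction)
                self.on_alert(f"ChatGPT API spend ${self.spent:.2f} reached "
                              f"{fraction:.0%} of ${self.monthly_budget:.2f}")
        return self.spent
```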

5. Re-evaluate and adjust 🔧

As technology and user behaviors shift, you need to review your ChatGPT API integration periodically to find new cost optimization opportunities. This might mean tweaking your caching approach, updating preprocessing guidelines, or even switching to a more cost-effective GPT model variant.

Final thoughts 🏁

The value you stand to derive from your ChatGPT API cost depends on multiple factors.

If your application demands extensive context and you’re willing to pay a premium price, GPT-4 is the way to go. Its 32K variant is especially suited to tasks that involve long documents or lengthy conversations.

If, on the other hand, you’re looking for a balance between performance and cost, we’d recommend GPT-3.5 Turbo. It’s versatile and can handle many tasks without straining your budget.

As you make the choice, remember to also consider the extra expenses that come with the ChatGPT API. You need to account for infrastructure, data transfer, security measures, and all their accompanying bills.

With the right strategies, though, you should be able to optimize all those ChatGPT API costs. You can proceed to cache responses, minimize text inputs, and estimate costs with tools like Tiktoken.

But don’t stop there. As AI technology progresses, so will strategies to leverage its power optimally.

Let us know if you have any questions about what ChatGPT API costs really involve and how to keep them down.